PeCoH

The objectives of the Performance Conscious HPC (PeCoH) project are, firstly, to raise users' awareness and knowledge of performance engineering, i.e., to assist in identifying and quantifying potential efficiency improvements in scientific codes and in the way these codes are used. Secondly, the project aims to increase the coordination of performance engineering activities in Hamburg and to establish novel services that foster performance engineering, which are then evaluated and implemented at the participating data centers. This coordination is achieved by setting up a Hamburg regional HPC competence center (HHCC) as a virtual organization that will contribute to the federated HPC infrastructure of HLRN and the Gauß-Allianz. The HHCC will combine the strengths of the university data center (Regionales Rechenzentrum, RRZ), the German Climate Computing Center (DKRZ), and the data center of the Technical University Hamburg-Harburg (TUHH).

People involved from WR

- Prof. Dr. Julian Kunkel (contact person)

Organization

Principal Investigators

Thomas Ludwig – University of Hamburg / DKRZ

The Scientific Computing group of Prof. Thomas Ludwig has a long history in parallel file system research; it also investigates energy-efficiency and cost-efficiency aspects and has developed tools for performance analysis. Prof. Ludwig is the director of the German Climate Computing Center (DKRZ). The group has been embedded in DKRZ since 2009 and therefore also addresses aspects that are important for earth system scientists. With regard to teaching, the group has offered interdisciplinary seminars and other courses on software engineering in science since 2012.

Matthias Riebisch – University of Hamburg / SWK

The Software Construction Methods group (in German: Softwareentwicklungs- und -konstruktionsmethoden, SWK) of Prof. Matthias Riebisch and his prior group at the Ilmenau University of Technology have experience in adapting and optimizing software architectures and software development processes. This includes, for example, measuring software quality properties, forecasting properties after changes using impact analysis techniques, and methods for partitioning software applications for optimized execution on parallel computing platforms.

Stephan Olbrich – University of Hamburg / RRZ

The Scientific Visualization group of Prof. Stephan Olbrich focuses on developing methods for parallel data extraction and efficient rendering for volume and flow visualization of high-resolution, unsteady phenomena. Prof. Olbrich is the director of the Regional Computing Center (RRZ). The group has integrated its software into HPC applications, e.g., for climate research. Its activities are part of the cluster of excellence Integrated Climate System Analysis and Prediction (CliSAP), which addresses both the post-processing of data sets and parallel data extraction at runtime as part of the simulation.

Associated Partners

German Climate Computing Center / Deutsches Klimarechenzentrum (DKRZ)

DKRZ has long devoted one of its departments to user consultancy. This department supports DKRZ's users in the effective use of its systems by providing general personal advice on the use of the systems, helping users port applications to the HPC systems, and offering conceptual guidance on parallelization and optimization strategies for user code, specifically with respect to the provided HPC systems.

Regional Computing Center / Regionales Rechenzentrum der Universität Hamburg (RRZ)

The HPC team at RRZ operates a 396-node Linux cluster and more than 2 PByte of disk storage. The team is part of the consulting network of the North German Supercomputing Alliance (HLRN, in German: Höchstleistungsrechenzentrum Nord). HPC activities (both local and for HLRN) include user support, user education (in parallel programming and single-processor optimization), and benchmarking. RRZ maintains and further develops BQCD (Berlin quantum chromodynamics program) as one of the HLRN application benchmark codes (see also M. Allalen, M. Brehm, and H. Stüben. Performance of Quantum Chromodynamics (QCD) Simulations on the SGI Altix. Computational Methods in Science and Technology, 14(2):69–75, 2008).

Computer Center of Hamburg University of Technology / RZ der Technischen Universität Hamburg (TUHH RZ)

The TUHH RZ has HPC consultants who support local users as well as users of the North German Supercomputing Alliance (HLRN). TUHH RZ operates a 244-node Linux cluster. TUHH RZ and RRZ cooperate by sharing specialized parts of their HPC hardware.

Project Management

Funding

This project is funded by the German Research Foundation (DFG) within its call on performance engineering.

Please also see other funded projects in the call.

Work Program

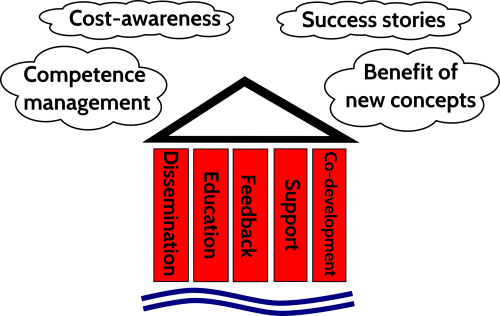

The foundation of our work plan is a set of services, implemented at the HHCC, for scientists of different fields. These services offer basic support for performance engineering, co-development, and education. Furthermore, they provide feedback to users and collect and disseminate knowledge for user support. Orthogonal to these services, we research methods to raise user awareness of advanced performance engineering. The proposed methods cover cost-awareness, competence management, the demonstration of benefit via success stories, and the quantification of benefit for alternative processing concepts.

An overview of the methods and services is given in the figure. Services and methods benefit from each other: while the services transfer computer science knowledge to scientists, they also identify and channel user needs and experience, which are fed into the method development. The research strategies described by the methods are evaluated, and the resulting analyses are pushed into the service ecosystem. All methods and services described in the following will be implemented in close collaboration with the three data centers.

Methods

Competence management

Some competences are conceptual and relevant for most data centers, such as understanding resource management; others are hardware- or software-specific, such as knowing what performance to expect from collective MPI operations. We will increase transparency about the benefit of HPC competences, especially those relevant for performance engineering. To this end, relevant competences are identified and classified according to their necessity and usefulness for different scientific domains. We will also systematically analyze the need for performance optimization from the perspectives of both scientists and data centers. To make the competences more visible in the community, we will establish HPC certification levels. We will bundle the teaching material required to master the various certification levels and organize standardized online examinations for acquiring the certificates.
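A minimal sketch of how such a classification could be represented, assuming a skill tree in which every skill carries a level (the Basic, Intermediate, and Expert levels used in the project's certification deliverables), the user roles it suits, and the scientific domains it is relevant for. The `Skill` class, the `view` function, and the example skills are hypothetical illustrations, not the project's actual skill tree; `view` derives the subset of skills that matches one user profile.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Level(IntEnum):
    """Skill levels; ordered so that comparisons like level <= target work."""
    BASIC = 1
    INTERMEDIATE = 2
    EXPERT = 3


@dataclass
class Skill:
    """One node of a (hypothetical) HPC skill tree."""
    name: str
    level: Level
    roles: set[str] = field(default_factory=set)    # e.g. {"user", "developer"}
    domains: set[str] = field(default_factory=set)  # empty set = relevant everywhere
    children: list["Skill"] = field(default_factory=list)


def view(skill: Skill, role: str, domain: str, max_level: Level) -> list[str]:
    """Return the names of all skills (depth-first) that match the given profile."""
    selected = []
    relevant = (
        skill.level <= max_level
        and role in skill.roles
        and (not skill.domains or domain in skill.domains)
    )
    if relevant:
        selected.append(skill.name)
    for child in skill.children:
        selected.extend(view(child, role, domain, max_level))
    return selected


# Illustrative example tree; the skill names are made up for this sketch.
tree = Skill("HPC knowledge", Level.BASIC, {"user", "developer"}, children=[
    Skill("Use of a workload manager", Level.BASIC, {"user", "developer"}),
    Skill("Collective MPI performance", Level.INTERMEDIATE, {"developer"}),
    Skill("Parallel I/O tuning", Level.EXPERT, {"developer"}, {"climate"}),
])

print(view(tree, role="user", domain="climate", max_level=Level.BASIC))
```

With this example tree, the call prints `['HPC knowledge', 'Use of a workload manager']`, because the other two skills lie above the Basic level. Storing roles and domains as attributes, rather than maintaining separate trees, keeps a single skill tree while still allowing different views.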

Cost-awareness

Executing a scientific application and keeping data is costly for the data center and, unfortunately, users are not aware of these costs. Understanding the cost of running applications and keeping data is important for identifying the benefit of optimization, which itself is costly in terms of brainware (i.e., the required personnel effort). We will develop models to approximate costs for scientists and foster discussion with the open-source community to embed cost-efficiency calculations into the job summaries of resource managers. Additionally, we will track the resource utilization and efficiency of applications and of the data centers and provide this information to the users.
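As a rough illustration of such a model, the following sketch derives a price per node-hour from a data center's annual costs and its utilization, and then charges each job for the node-hours it occupied. It parses job records in the pipe-separated form printed by `sacct --parsable2 --noheader --format=JobID,NNodes,ElapsedRaw`. The `DataCenterCosts` class, `job_costs`, and all cost figures are hypothetical assumptions; the sketch is not meant to reproduce the cost models developed in the project.

```python
from dataclasses import dataclass


@dataclass
class DataCenterCosts:
    """Hypothetical annual cost figures of a cluster (EUR per year)."""
    hardware_amortization: float
    energy: float
    personnel: float      # brainware of the operations team
    nodes: int
    utilization: float    # fraction of all node-hours delivered to jobs

    def price_per_node_hour(self) -> float:
        """Spread the total annual cost over the node-hours actually used."""
        total = self.hardware_amortization + self.energy + self.personnel
        usable_node_hours = self.nodes * 365 * 24 * self.utilization
        return total / usable_node_hours


def job_costs(sacct_output: str, price: float) -> dict[str, float]:
    """Map JobID to cost for lines of the form 'JobID|NNodes|ElapsedRaw'."""
    costs = {}
    for line in sacct_output.strip().splitlines():
        job_id, nnodes, elapsed_s = line.split("|")
        if "." in job_id:  # skip job steps such as 1001.batch
            continue
        node_hours = int(nnodes) * int(elapsed_s) / 3600
        costs[job_id] = node_hours * price
    return costs


price = DataCenterCosts(hardware_amortization=500_000, energy=226_000,
                        personnel=150_000, nodes=100,
                        utilization=0.5).price_per_node_hour()
sample = "1001|4|7200\n1001.batch|1|7200\n1002|16|900\n"
print(price)                     # 2.0 (876,000 EUR / 438,000 node-hours)
print(job_costs(sample, price))  # {'1001': 16.0, '1002': 8.0}
```

Job steps such as `1001.batch` appear on separate lines in `sacct` output; the sketch skips them so that each job is charged only once. A more detailed model might, for example, also account for storage or for the energy a job actually consumed.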

Success stories

In our experience, demonstrating the benefit of performance engineering, in terms of better productivity for scientists, is needed to achieve broader acceptance. Therefore, we will create and publish success stories from different scientific fields. To achieve this goal, we will cooperate with scientists and support them throughout the project period. We will also gather existing and upcoming optimization studies and compute their respective cost-benefit using our cost models. While many studies are already available, finding the appropriate information is a daunting task. Therefore, we will investigate methods to improve the searchability of, and navigation between, relevant performance data.
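A cost-benefit estimate of an optimization study can be sketched as a break-even calculation: the brainware invested in an optimization pays off once the accumulated savings in compute costs exceed it. The sketch assumes a fixed price per node-hour, a fixed cost per person-month, and an identical saving for every run; the function `break_even_runs` and the example numbers are hypothetical.

```python
import math


def break_even_runs(node_hours_before: float, node_hours_after: float,
                    price_per_node_hour: float, person_months: float,
                    cost_per_person_month: float) -> int:
    """Smallest number of runs after which an optimization has paid for itself.

    Assumes every run of the optimized code saves the same number of node-hours.
    """
    saving_per_run = (node_hours_before - node_hours_after) * price_per_node_hour
    if saving_per_run <= 0:
        raise ValueError("the optimization does not reduce node-hours")
    effort = person_months * cost_per_person_month
    return math.ceil(effort / saving_per_run)


# Hypothetical example: a run shrinks from 2,000 to 1,500 node-hours,
# compute costs 0.50 EUR per node-hour, and the tuning took 2 person-months
# at 6,000 EUR each: 12,000 EUR / 250 EUR saved per run.
print(break_even_runs(2000, 1500, 0.50, 2, 6000))  # 48
```

If the code is run more often than this break-even count, the optimization yields a net benefit; runs below it mean the brainware cost has not yet been recovered.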

Benefit of (new) concepts

Together with domain scientists, we will estimate and evaluate the benefit of novel concepts for running their experiments based on the individual scientific use cases. During the project, we will approach users, discuss the benefit of alternative architectures, programming concepts, and workflow systems, and model this benefit to estimate its value. For certain use cases, tools and concepts from Big Data analytics and in-situ visualization, but also from software engineering, have the potential to significantly improve the scientific outcome and, at the same time, increase data center efficiency. We will also investigate compiler-assisted debugging tools that speed up the development process. Our results will be monitored using the survey we develop and published as (success) stories.

Services

Dissemination

This service will disseminate knowledge, education, concepts, and opportunities of performance engineering. To this end, we will establish the virtual organization for the HHCC and publish all results and methodological concepts there, together with educational and supporting material (such as a knowledge base). In our experience, it is very difficult to find relevant information; therefore, we will explore approaches to increase the searchability of this information.

Education

This service gives users the opportunity to develop their HPC skills, especially in performance engineering. We will establish HPC competence certificates ("HPC-Führerschein", i.e., an "HPC driving license") on various levels. To this end, we will package open educational resources (OER) from existing material and extend them into useful online courses. This fits very well into the Hamburg Open Online University, and we will collaborate with this initiative to improve the pedagogical quality of the courses. Available courses in the region will be summarized and listed on the HHCC web page. Together with the certification process, this will help users to understand and improve their performance optimization skills.
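Trust in the certificates depends on how the online examination is run. As a minimal sketch, assuming a secret key held by the certification board, the code below draws each participant's questions at random from a large pool and attaches an HMAC-SHA256 tag to an issued certificate so that later modifications can be detected. All names (`BOARD_KEY`, `draw_questions`, `issue_certificate`, `verify_certificate`) are hypothetical. With an HMAC, only holders of the key can check a certificate; a real system would more likely use public-key signatures so that third parties can verify certificates as well, and this is not the project's implementation.

```python
import hashlib
import hmac
import json
import secrets

# Hypothetical secret of the certification board; in practice it would be
# stored securely, never generated ad hoc or kept in source code.
BOARD_KEY = secrets.token_bytes(32)


def draw_questions(pool: list[str], n: int) -> list[str]:
    """Pick n distinct questions uniformly at random from a large pool."""
    return secrets.SystemRandom().sample(pool, n)


def issue_certificate(name: str, skill: str, level: str) -> dict:
    """Create a certificate record with an HMAC tag over its canonical JSON."""
    record = {"name": name, "skill": skill, "level": level}
    payload = json.dumps(record, sort_keys=True).encode()
    record["tag"] = hmac.new(BOARD_KEY, payload, hashlib.sha256).hexdigest()
    return record


def verify_certificate(cert: dict) -> bool:
    """Recompute the tag over all other fields and compare in constant time."""
    record = {k: v for k, v in cert.items() if k != "tag"}
    payload = json.dumps(record, sort_keys=True).encode()
    expected = hmac.new(BOARD_KEY, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, cert.get("tag", ""))


questions = draw_questions([f"Q{i}" for i in range(200)], 20)
cert = issue_certificate("A. User", "Use of a workload manager", "Basic")
print(verify_certificate(cert))   # True
cert["level"] = "Expert"          # tampering invalidates the tag
print(verify_certificate(cert))   # False
```

Serializing with `sort_keys=True` gives a canonical byte string, so the same certificate content always produces the same tag regardless of dictionary order.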

Feedback

This service proactively provides feedback to users and gives them metrics to reflect on their performance, i.e., how well their codes run. Thus, it identifies opportunities to improve the current situation. First, we support the deployment of an infrastructure that measures and assesses resource utilization at the application level and also quantifies costs. We then develop tools that semi-automatically create feedback for users about the efficiency of their runs. Our user support periodically reviews the collected information to identify performance issues and initiates conversations with users to address them.
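One simple metric such a feedback tool could report is CPU efficiency: the CPU time a job consumed divided by the CPU time it reserved (wall-clock time multiplied by the allocated cores). The sketch below assumes the inputs have already been taken from SLURM accounting fields such as `TotalCPU`, `ElapsedRaw`, and `AllocCPUS` and converted to seconds and core counts; the function name, the 50% threshold, and the message texts are hypothetical choices.

```python
def cpu_efficiency_feedback(total_cpu_s: float, elapsed_s: float,
                            alloc_cpus: int, threshold: float = 0.5) -> str:
    """Return a short feedback message for one job.

    CPU efficiency = consumed CPU time / (wall-clock time * allocated cores).
    """
    reserved = elapsed_s * alloc_cpus
    if reserved <= 0:
        return "no usable accounting data"
    efficiency = total_cpu_s / reserved
    if efficiency < threshold:
        return (f"CPU efficiency {efficiency:.0%} is below {threshold:.0%}; "
                "consider requesting fewer cores or checking the parallelization")
    return f"CPU efficiency {efficiency:.0%}: OK"


# A 1-hour job on 64 cores that consumed only 16 core-hours of CPU time
# has an efficiency of 25% and is flagged.
print(cpu_efficiency_feedback(16 * 3600, 3600, 64))
```

Flagged runs would then be candidates for the periodic review by user support; a sensible threshold likely differs between systems and application classes.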

Support

This service aids users who explicitly seek help in identifying and mitigating performance issues. Since optimizing individual applications is time-consuming, the support focuses on identifying issues in the preparation and configuration of individual applications and workflows. Addressing these low-hanging fruits also promises significant performance improvements, and the solutions can be transferred more easily between scientists.

Co-Development

In this service, we conduct a few joint efforts with scientists to evaluate and utilize new software concepts, such as programming languages and tools, and to understand the potential of novel architectures and processing systems. This is achieved by inferring knowledge from existing studies and by providing simplified performance and cost models for these alternatives. Together with scientists, we establish pilot studies to support the rewriting of existing codes and document the results as success stories. During the co-development, we will periodically capture the fraction of work time spent on different tasks, e.g., design, programming, and runtime. This will allow us to understand the required programming time; combined with our cost analysis, it will make the cost-efficiency of novel approaches more visible. To allow other scientists to conduct similar studies, we will develop and publish a quality control method for conducting surveys that assess the benefit of systematic performance engineering.

Deliverables

- This deliverable contains the first annual report, with information about the project's progress, status, and next steps. For the sake of completeness, the report gives a brief overview of the major project goals and of the partners and scientific institutions involved in the project. Relevant information about the general organization of the project (e.g., the delayed project start due to difficulties in hiring qualified scientific staff) is also included. The results achieved in the six work packages are presented in more detail in the technical section of the report. The emphasis is on modeling HPC usage costs to calculate costs and statistics for SLURM jobs and on developing an HPC certification program to improve the education of HPC users.

- This deliverable combines the second and third annual reports into a single report. It includes information about the project's progress, status, and sustainability. For the sake of completeness, and similar to the first annual report (D1.1), the report gives a brief overview of the major project goals and of the partners and scientific institutions involved in the project. Relevant information about the general organization of the project and required adjustments is also included. The results achieved in the six work packages are presented in more detail in the technical section of the report. The emphasis is on performance and software engineering concepts, tuning parallel programs without modifying the source code, an automatic tuning approach using a Black Box Optimizer tool (based on genetic algorithms), and success stories based on best practices that support scientists in their daily work.

- This deliverable presents a collection of performance engineering and software engineering concepts that can help scientists improve the development of scientific software. It also includes an evaluation of these concepts against selected criteria to show their benefits when applied in scientific programming.

- This deliverable is based on the insight that software engineering methods can increase productivity by providing scaffolding for collaborative programming, reducing coding errors, and increasing the manageability of software. It describes how a subset of software engineering concepts, considered suitable and useful for scientists in their programming tasks, was chosen for the study. The deliverable also contains a tutorial that teaches the selected concepts. Furthermore, it provides an experience report on the code co-development process and proposes solutions for improving this process further.

- This deliverable discusses the modeling of HPC usage costs. For this purpose, four distinct cost models (from simple to more complex) were developed. Furthermore, two tools were developed to apply the four cost models to SLURM jobs: the first tool is intended to be run from the job epilogue script and reports the cost of a single job; the second tool is intended to be run from the command line and calculates costs and statistics for a set of selected jobs based on SLURM's accounting records.

- This deliverable comprises the code for reporting job costs.

- This deliverable discusses the identification of HPC competences and a new approach to an HPC certification program. Our approach is based on an HPC skill tree that supports different views of the HPC content with the help of additional attributes that define the level of a skill (Basic, Intermediate, or Expert), its suitability for a user's role, and its suitability for a scientific domain. We strictly separate the certificate definition from content provision (similar to the concept of a “Zentralabitur”, the centrally set school-leaving examination in German states) and assume that collaborating scientific institutions will complement each other in producing content, whereas the certification board has the power to establish generally accepted certificate definitions and corresponding exams without the burden of being responsible for the content.

- This deliverable contains the workshop material for acquiring basic-level HPC skills. It covers topics such as System Architectures, Hardware Architectures, I/O Architectures, Performance Modeling, Parallelization Overheads, Domain Decomposition, Job Scheduling, Use of the Cluster Operating System, Use of a Workload Manager, and Benchmarking.

- This deliverable is available online via the Hamburg HPC Competence Center (HHCC) website. The tutorial is also available in text form (PDF).

- This deliverable describes the prototypical process for conducting multiple-choice tests for the online examination of HPC skills. It is based on the insight that trust in the generated certificates is important, and it describes the overall architecture and security concept of our solution. The strategy for mitigating potential cheating attempts is based on a large question pool, user registration, and the technical verifiability of the tests and certificates using cryptographic methods.

- This deliverable focuses on increasing the runtime performance of parallel applications by tuning parallel programs without modifying the source code (or with only minor modifications), e.g., by setting appropriate runtime options and selecting the best-performing compiler and MPI environment for each specific program. It also includes experiments for finding good settings for using the standard software packages Gaussian and MATLAB in an HPC environment. Furthermore, the deliverable describes how switching to an automatic tuning approach using a Black Box Optimizer tool (based on genetic algorithms) can greatly reduce the effort that manually tuning parallel programs required at the beginning of the project. Recommendations (lessons learned, best practices, …) are given for the software packages we dealt with.

- This deliverable is represented by the HHCC website.

- This deliverable documents, as success stories, the results of pilot studies we established together with scientists. These studies support the rewriting of existing codes and the tuning of parallel programs. The pilot studies were selected to represent exemplary applications so that the results can be transferred in generalized form to similar problems. The collection of success stories covers topics such as encouraging HPC users to use integrated development environments (IDEs) for program development, teaching important software engineering concepts with a tutorial, finding an insidious bug in a large Fortran program, achieving performance improvements for R programs, and using a Black Box Optimizer tool, which is based on genetic algorithms, to automatically find the parameter combinations for building and running parallel applications that give the best benchmark results.
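The Black Box Optimizer tool mentioned in the deliverables searches for good build and runtime parameter combinations with a genetic algorithm. The following sketch illustrates the general technique on a small discrete search space: truncation selection with elitism, uniform crossover, and random mutation. The parameter names and values and the synthetic `benchmark` function, which stands in for building and running a program and measuring its runtime, are hypothetical; the sketch does not reproduce the actual tool.

```python
import random

# Hypothetical search space: each gene is one build or runtime option.
SPACE = {
    "compiler": ["gcc", "icc", "clang"],
    "opt_level": ["-O1", "-O2", "-O3"],
    "mpi": ["openmpi", "intelmpi"],
    "ranks_per_node": [8, 16, 32, 64],
}
KEYS = list(SPACE)


def benchmark(cfg: dict) -> float:
    """Synthetic stand-in for a measured runtime in seconds (lower is better)."""
    t = 100.0
    t -= {"gcc": 5, "icc": 10, "clang": 7}[cfg["compiler"]]
    t -= {"-O1": 0, "-O2": 8, "-O3": 12}[cfg["opt_level"]]
    t -= 6 if cfg["mpi"] == "intelmpi" else 0
    t += abs(cfg["ranks_per_node"] - 32) / 4  # 32 ranks per node is best here
    return t


def random_cfg(rng: random.Random) -> dict:
    return {k: rng.choice(SPACE[k]) for k in KEYS}


def crossover(a: dict, b: dict, rng: random.Random) -> dict:
    # Uniform crossover: each gene is taken from one of the two parents.
    return {k: (a if rng.random() < 0.5 else b)[k] for k in KEYS}


def mutate(cfg: dict, rng: random.Random, rate: float = 0.2) -> dict:
    # Each gene is replaced by a random value with probability `rate`.
    return {k: rng.choice(SPACE[k]) if rng.random() < rate else v
            for k, v in cfg.items()}


def optimize(generations: int = 20, pop_size: int = 12, seed: int = 0) -> dict:
    rng = random.Random(seed)
    population = [random_cfg(rng) for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=benchmark)         # fastest configurations first
        parents = population[: pop_size // 2]  # truncation selection
        children = [mutate(crossover(*rng.sample(parents, 2), rng), rng)
                    for _ in range(pop_size - len(parents))]
        population = parents + children        # elitism: parents survive
    return min(population, key=benchmark)


best = optimize()
print(best, benchmark(best))
```

In a real setting, each call to `benchmark` is an expensive build-and-run cycle, so caching the results of configurations that were already measured would be a natural addition.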

Publications

- Towards an HPC Certification Program (Julian Kunkel, Kai Himstedt, Nathanael Hübbe, Hinnerk Stüben, Sandra Schröder, Michael Kuhn, Matthias Riebisch, Stephan Olbrich, Thomas Ludwig, Weronika Filinger, Jean-Thomas Acquaviva, Anja Gerbes, Lev Lafayette), In Journal of Computational Science Education, (Editor: Steven I. Gordon), ISSN: 2153-4136, 2019-01

Posters

- Performance Conscious HPC (PeCoH) – 2019 (Kai Himstedt, Nathanael Hübbe, Sandra Schröder, Hendryk Bockelmann, Michael Kuhn, Julian Kunkel, Thomas Ludwig, Stephan Olbrich, Matthias Riebisch, Markus Stammberger, Hinnerk Stüben), Frankfurt, Germany, ISC High Performance 2019, 2019-06-18

- Performance Conscious HPC (PeCoH) – 2018 (Kai Himstedt, Nathanael Hübbe, Sandra Schröder, Hendryk Bockelmann, Michael Kuhn, Julian Kunkel, Thomas Ludwig, Stephan Olbrich, Matthias Riebisch, Markus Stammberger, Hinnerk Stüben), Frankfurt, Germany, ISC High Performance 2018, 2018-06-26

- Performance Conscious HPC (PeCoH) (Julian Kunkel, Michael Kuhn, Thomas Ludwig, Matthias Riebisch, Stephan Olbrich, Hinnerk Stüben, Kai Himstedt, Hendryk Bockelmann, Markus Stammberger), Frankfurt, Germany, ISC High Performance 2017, 2017-06-20

Talks

| Date | File | Context | Location |
|---|---|---|---|
| 2019-10-18 | Presentation of the status of the project | 9th HPC-Status Conference of the Gauß-Allianz | Paderborn (Germany) |
| 2019-07-31 | Presentation of the PeCoH project, skill tree, and content production workflow | Workshop on HPC Training, Education, and Documentation | Hamburg (Germany) |
| 2019-06-18 | Presentation of the skill tree and the PeCoH project | BoF ISC 19: International HPC Certification Program | Frankfurt (Germany) |
| 2019-03-26 | Presentation of the PeCoH project | Performance Engineering Workshop | Dresden (Germany) |
| 2018-10-09 | Presentation of the status of the project | 8th HPC-Status Conference of the Gauß-Allianz | Erlangen (Germany) |
| 2017-12-04 | Presentation of the work in progress | 7th HPC-Status Conference of the Gauß-Allianz | Stuttgart (Germany) |
| 2017-07-20 | Presentation of the PeCoH project | FEPA workshop | Erlangen (Germany) |

Other publications

| Date | File | Context | Location |
|---|---|---|---|
| 2018-06-01 | Concept Paper for the HPC Certification Program (Draft Version 0.91 – June 1, 2018) | PeCoH | Hamburg (Germany) |
| 2018-02-14 | Concept Paper for the HPC Certification Program (Draft Version 0.9 – February 1, 2018) | PeCoH | Hamburg (Germany) |
| 2017-11-12 | Handout about the work in progress of our HPC Certification Program | SC-17 | Denver, Colorado (USA) |
| 2017-06-18 | Handout about the work in progress of our HPC Certification Program | ISC-17 | Frankfurt (Germany) |